Developing Your AI BS Detector

Introduction

I gave a talk at MaRS on this topic. The event was put on by Steve O’Neil and his team, who all did an excellent job. The venue was packed, standing room only, with a fantastic audience of 300-400 people.

The goal of the event was to have a discussion around “Rational AI in the Enterprise.” I think all of the speakers did a wonderful job of honoring the topic. We wanted to present the facts as they are, on the ground, in real-world projects and situations.

My talk was aimed at a mixed audience of technologists, investors, and executives. The goal was to instill a sense of skepticism when considering if and when a given business problem calls for an AI solution, and also to instill some confidence when talking with someone who is pitching an AI solution to a business problem.

If you want to skip ahead, you can download the slides directly. I will be referencing selected slides by section below.

Overview

The plan for the talk is to first share some of my background, to show how my experiences have shaped my perspective on AI. Then, I’ll give an example of a pitch for a fraud detection AI that I developed specifically for this talk. In closing, I’ll discuss some ways to separate the wheat from the chaff when it comes to AI projects and solutions.

Background

My career has spanned a wide range of technical and leadership roles over a variety of industries, including e-commerce, financial services, and healthcare. (I expanded further on this during the talk, but you can see my About page for more details.)

AI for fraud detection

This is an example of a fraud detection AI solution I developed for the talk. I explained the problem domain as fraud detection for e-commerce or other electronic transaction services. I also described my “co-founder,” someone I’ve worked with for many years on a variety of projects and who is, in their own right, an amazing technologist with an extensive background in applied, high-performance machine learning. My co-founder is also classically trained in theoretical computer science. The goal of this section was to communicate that the founding team is credible and enticing from an investment perspective.

Our AI

This slide describes some facts about the AI solution my co-founder and I developed. All of the items on this slide are true. The system is proprietary, for performance reasons. It is also proven in real-world applications, since I’ve advised clients who have used similar systems to great success. Lastly, the system is extremely high-performance and very scalable: it can handle all the traffic on VisaNet for about $20 per month in operational costs.

The goal of this slide was to supply plenty of material for buzzword bingo and to reference exciting tools and technologies.

The system is shown on the next slide (yes, it fits on a slide).

(next slide)

This slide shows the code for the system, the main part of which is only the following six lines.

```go
func isFraud(ccIsForeign bool, amount float64, transactionsToday int) bool {
	if ccIsForeign && amount > 1000 && transactionsToday > 5 {
		return true
	}
	return false
}
```
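To make the joke concrete, here is the same rule wrapped in a tiny runnable Go program. The three sample transactions are hypothetical, chosen only to show that all three conditions must hold at once before anything is flagged.

```go
package main

import "fmt"

// isFraud reproduces the six-line rule from the slide unchanged.
func isFraud(ccIsForeign bool, amount float64, transactionsToday int) bool {
	if ccIsForeign && amount > 1000 && transactionsToday > 5 {
		return true
	}
	return false
}

func main() {
	// Hypothetical sample transactions, invented for illustration only.
	fmt.Println(isFraud(true, 1500, 6))   // true: foreign card, over $1000, 6 transactions today
	fmt.Println(isFraud(true, 1500, 5))   // false: 5 transactions is not more than 5
	fmt.Println(isFraud(false, 5000, 10)) // false: domestic card
}
```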

The point of this slide is to show that although my claims were all factually correct, viewing only the code for the AI system in question would not get anyone excited from an investment perspective. The AI solution and my mock-pitch were constructed purely to demonstrate how people can be taken in by AI hype.

AI is a PURSUIT, not a THING

AI, in practical terms, is the pursuit of human-level intelligence by machines. It isn’t a single thing you build, or a product, or a solution of some kind. It is the process of trying to build increasingly smarter systems in order to free up the time and energy of humans to focus on more important and rewarding aspects of existence.

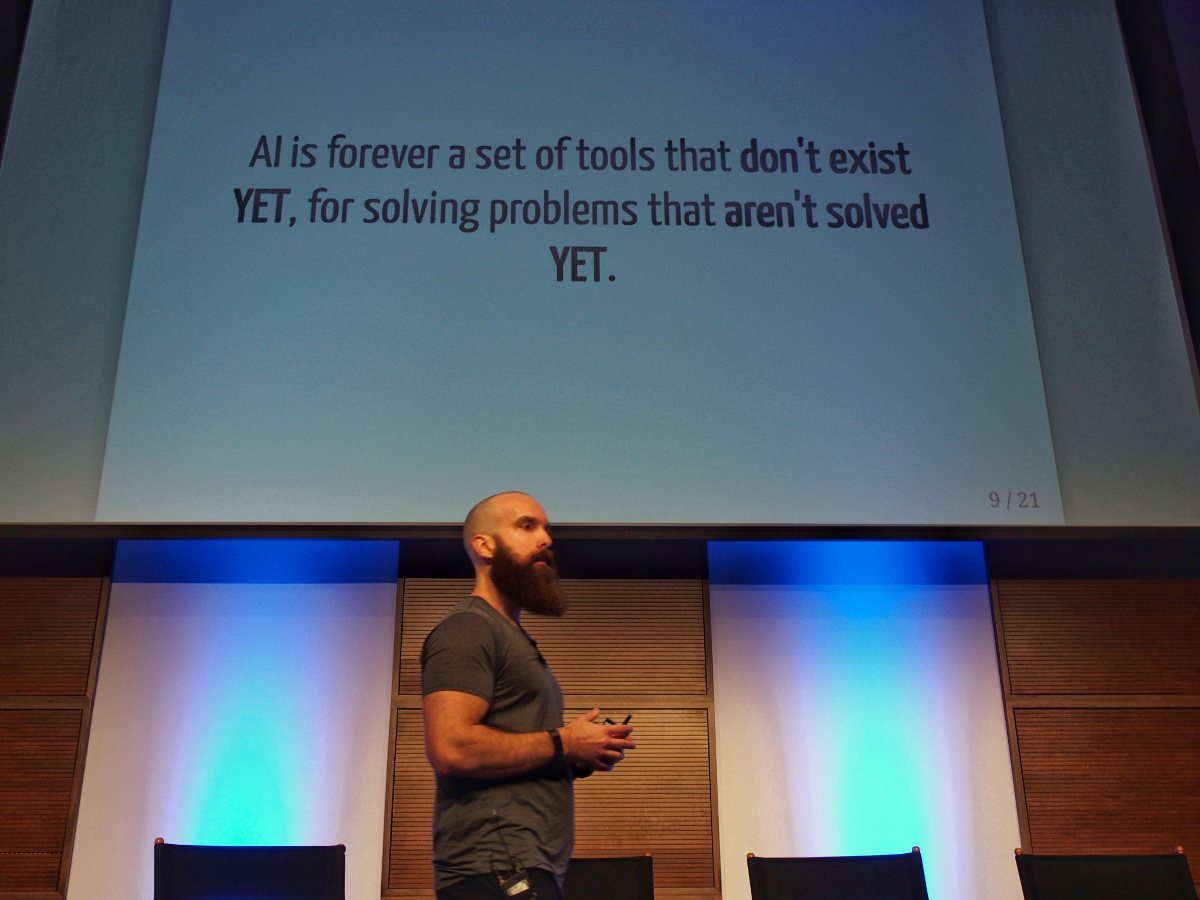

AI is forever a set of tools that don’t exist YET, for solving problems that aren’t solved YET

This is a different take on the classic statement that “AI is whatever hasn’t been done yet.” Though the idea of a technology may be considered AI, once that technology is realized, people stop calling it AI. There are countless examples of this, such as optical character recognition, facial recognition, chess playing, and many more. These things aren’t really considered to be AI anymore; they’re just things your smartphone can do.

This is an important point because it completely disarms claims by any technology team or company trying to sell you an AI solution to a business problem. They might have a solution, but calling it AI is just window dressing. It’s probably just a good solution to a business problem.

AI is done, to the extent it is done at all, by research teams, not product teams

Since AI is the pursuit of technology capable of a more advanced level of intelligence, it is almost by definition a research activity. This makes it an endeavour wholly unsuited to almost every growth-stage startup or large enterprise, except the few that have an actual research department.

A team at a growth-stage tech startup that is working on building a product is generally not building any AI which hasn’t been built already. They are, or they should be, using established tools, frameworks, algorithms, and other proven knowledge in order to build a product to satisfy a customer demand. Efforts to advance the current state of AI research are simply a distraction for a startup that builds products. AI isn’t necessary in order for the startup to provide a great experience and tons of value for customers.

(next slide)

This slide is a list of some things I’ve seen during my career in tech thus far. They’ve all had similar hype trains associated with them. Some have now gone out of fashion.

The thing to note is that these buzzwords are extremely vague, and seem to be getting more vague as time progresses. While Business Intelligence efforts seemed to have a rather concrete scope (including creating reporting systems and aggregating data in order to allow humans to make better decisions), AI by contrast is almost completely nebulous. It will accomplish everything, solve everything, and advance everything, resulting in some utopian or dystopian future. Vague claims are a dead giveaway that the hype is out of control.

An anecdote on fraud, AI, and rules-based systems

I was once contacted by a venture capital firm who asked me to pay a visit to one of their portfolio companies. They wanted me to help the company make some progress on scaling their technical team, and to provide guidance on some particularly difficult technical problems they were trying to solve. Along the way, I spoke with one of the executives who was very keen on improving their fraud detection system.

The executive was convinced that their current system, designed around rules for flagging transactions that were likely fraudulent, was archaic, and that they needed some AI and Data Science to achieve more effective fraud detection. With that in mind, the executive had approved a budget for hiring an entire Data Science team, and that team had now been on site for the last four months working on the problem of developing this new and wonderful system.

I went to speak with the team in question and asked them how they were progressing. The news was not good. They were having difficulty improving the accuracy of the fraud detection system they were building. They had tried numerous algorithms, data preprocessing techniques, and other strategies. They were simply stuck, and the pressure from the executive to produce a new Data Science and AI-based fraud detection system was getting worse.

I asked them what they were using for training data for the new system.

They said they were using the labeled output data from the current rules-based system. /facepalm…

Of course the new system wouldn’t be any more accurate! In fact, it wouldn’t even be as good as the current rules-based system, since any machine learning approach to that problem is only emulating the output of the rules-based system in the first place.
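The trap is that the evaluation target is the rules’ own output. Here is a minimal sketch of that loop, assuming a hypothetical `Transaction` type with the same three features as the rule, a hypothetical `agreement` metric, and a toy “candidate” model that drops the foreign-card check. When the labels come from the rules, the best possible score (1.0) goes only to a model that reproduces the rules exactly. Any fraud the rules miss and the new model catches counts as an error.

```go
package main

import "fmt"

// Transaction is a hypothetical record carrying the three features the rule uses.
type Transaction struct {
	Foreign bool
	Amount  float64
	Today   int
}

// rules stands in for the existing rules-based system.
func rules(t Transaction) bool {
	return t.Foreign && t.Amount > 1000 && t.Today > 5
}

// agreement returns the fraction of transactions on which model matches labels.
func agreement(model func(Transaction) bool, txs []Transaction, labels []bool) float64 {
	if len(txs) == 0 {
		return 0
	}
	matches := 0
	for i, t := range txs {
		if model(t) == labels[i] {
			matches++
		}
	}
	return float64(matches) / float64(len(txs))
}

func main() {
	txs := []Transaction{{true, 1500, 6}, {true, 200, 9}, {false, 5000, 10}, {true, 1200, 2}}

	// The "training labels" are produced by the rules themselves.
	labels := make([]bool, len(txs))
	for i, t := range txs {
		labels[i] = rules(t)
	}

	// A hypothetical candidate model that ignores whether the card is foreign.
	candidate := func(t Transaction) bool { return t.Amount > 1000 && t.Today > 5 }

	fmt.Println(agreement(rules, txs, labels))     // 1: the rules are a perfect "model" of themselves
	fmt.Println(agreement(candidate, txs, labels)) // 0.75: every deviation from the rules is scored as a mistake
}
```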

The executive seemed to think that a rules-based system was horribly outdated and that AI was needed. He seemed to be unaware that many (most?) of the AI systems handling things like fraud detection or credit risk decisions are rules-based. The executive completely bought into the hype surrounding AI, and it cost the company a great deal of time and money that would have been much better spent elsewhere.

Some BS Detector questions

What specific problem are you solving?

This is usually the first question I ask in a variety of situations, because people often start trying to solve a problem before they have fully specified what it is they want to solve. A nebulous or poorly-specified problem tends to attract a nebulous approach, like “building an AI.”

In order to guard against this, and also to facilitate the rest of the discussion, it’s important to have a clear understanding of what the problem actually is. Additionally, once the problem has been specified, it’s almost always revealed to be a business problem with known effective solutions rather than something requiring a novel technical approach. These business problems, and the companies who solve them, are almost always built on domain expertise, a professional network, focused objectives, and persistence, not AI magic.

What is the most naive solution? Did you try that?

Once the problem has been clearly specified, it’s best to try the simplest or most naive solution first. If that isn’t possible, there should be a good reason. Most technical problems that growth-stage startups need to solve are well addressed by textbook solutions. There are often three or more ways to solve these common technical problems that are known, easy to implement and maintain, and proven to work across a wide variety of scalability phases.

As an example, if you’re doing some kind of bin packing or knapsack problem, you can consider greedy heuristics, dynamic programming, and least-cost branch and bound. Those tools cover different data set sizes, and they let you make an informed decision about the importance of accuracy versus runtime performance. Developing some kind of AI packing prediction system is wholly unnecessary.
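For a sense of how little code a textbook solution takes, here is a minimal sketch of the dynamic-programming option for the 0/1 knapsack variant. It assumes non-negative integer weights and a modest integer capacity, and the function name and sample items are illustrative. It is exact, and its O(n × capacity) running time is the accuracy-versus-runtime trade-off in question.

```go
package main

import "fmt"

// knapsackDP solves the 0/1 knapsack problem exactly with dynamic programming.
// best[c] holds the highest total value achievable with total weight <= c.
// Time is O(len(weights) * capacity); memory is O(capacity).
func knapsackDP(weights, values []int, capacity int) int {
	best := make([]int, capacity+1)
	for i := range weights {
		// Walk capacities downward so each item is counted at most once.
		for c := capacity; c >= weights[i]; c-- {
			if v := best[c-weights[i]] + values[i]; v > best[c] {
				best[c] = v
			}
		}
	}
	return best[capacity]
}

func main() {
	// Hypothetical items: weights 10, 20, 30 with values 60, 100, 120.
	weights := []int{10, 20, 30}
	values := []int{60, 100, 120}
	fmt.Println(knapsackDP(weights, values, 50)) // 220: take the 20- and 30-weight items
}
```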

What about the next-most-naive?

Sometimes people will deliberately attempt the most naive solution with full knowledge that it will fail, simply so they can justify using or building the more exciting and fancy AI solution. A company can save a lot of time and money by instead considering the next-most-naive solution.

As mentioned above, many problems have solutions that work well across a wide variety of scalability stages. In the knapsack problem example, you can solve for small item sets relatively quickly and optimally with a dynamic programming approach. If you have more data and the dynamic programming solution is no longer appropriate, the answer is not to leap to some AI-based solution for knapsack problems, but rather to consider the next-most-naive solution.
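One plausible next-most-naive step for larger inputs is a greedy heuristic. Here is a minimal sketch, assuming positive integer weights and a hypothetical `Item` type. It sorts by value per unit of weight and takes whatever still fits, running in O(n log n) but giving up the guarantee of an optimal answer.

```go
package main

import (
	"fmt"
	"sort"
)

// Item is a hypothetical knapsack item; Weight is assumed to be positive.
type Item struct {
	Weight, Value int
}

// knapsackGreedy takes items in descending order of value density while they fit.
// Fast, but not guaranteed to find the optimal packing.
func knapsackGreedy(items []Item, capacity int) int {
	sorted := append([]Item(nil), items...) // copy so the caller's slice is untouched
	sort.Slice(sorted, func(i, j int) bool {
		// Compare Value/Weight ratios by cross-multiplying (valid for positive weights).
		return sorted[i].Value*sorted[j].Weight > sorted[j].Value*sorted[i].Weight
	})
	total, remaining := 0, capacity
	for _, it := range sorted {
		if it.Weight <= remaining {
			remaining -= it.Weight
			total += it.Value
		}
	}
	return total
}

func main() {
	items := []Item{{10, 60}, {20, 100}, {30, 120}}
	fmt.Println(knapsackGreedy(items, 50)) // 160
}
```

On this input the greedy pass returns 160, while the optimum (taking the 20- and 30-weight items) is 220. That gap is the price of the speedup, and it is a trade-off you can choose deliberately rather than paper over with an AI.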

Why is what you’re doing considered AI?

This is, intentionally, a bit of a trick question. If someone is trying to sell you on investing in a project or company and they’re playing up the AI aspect of it, ask them why the whole thing is considered AI in the first place. If they’re solving a valuable business problem, and happen to be using some new and interesting technology to do it, it’s potentially an interesting investment. However, if they just like the idea of AI and are using it as a solution in search of a problem, then that’s probably a very dangerous investment and should be avoided.

Are you using a framework?

If so, why is your solution defensible?

If not, why aren’t you wasting time?

As above, this one is also somewhat of a trick question. Recall that AI is done, to the extent it is done at all, by research teams. A product team at a growth-stage startup or a development team in a larger organization probably isn’t equipped to advance the forefront of human knowledge in AI. Therefore, they should probably be using existing frameworks and tooling, which lets them spend their valuable time solving the customer and business problems that matter, in a way that is efficient to operate and maintain. Building a custom AI framework takes time and resources away from those goals, yet it is often touted as a selling point by someone trying to play up how novel their solution is.

Lastly, some companies will argue that because they’re using an AI framework to solve business problem X, their solution is valuable because of the AI component. WRONG! The solution must be valuable in its own right! The customers who want business problem X solved do not care how it is solved, so long as the solution works and is maintainable. The business problem is what is important, not the fact that an AI framework was used to solve it.

When you hear AI, be suspicious.

Above all, be suspicious. When someone says AI, they may be solving a very important problem… or they may just want your money.